Artificial intelligence (AI) is transforming the technological landscape, with models like ChatGPT at the forefront. These systems process enormous volumes of data, pushing the limits of machine learning and computing hardware. However, a significant challenge lurks beneath the surface: the von Neumann bottleneck. The bottleneck stems from the traditional architecture of computing systems, which separates memory from processing units, so data must constantly shuttle between the two. The speed of that transfer can limit the overall performance of AI systems. To address this issue, researchers at Peking University led by Professor Sun Zhong have introduced a new approach known as the dual-in-memory computing (dual-IMC) scheme.

In traditional computing architectures, the von Neumann bottleneck arises from the limited bandwidth for moving data between memory and processors. As data volumes grow, this limitation becomes increasingly problematic. Matrix-vector multiplication (MVM) operations are particularly affected, because they form the backbone of neural network calculations. A neural network, loosely inspired by the structure of the human brain, stacks many layers, and each layer multiplies its input vector by a large matrix of learned weights, so every inference requires moving large amounts of data. Given the demands of today's AI applications, overcoming the restrictions of the von Neumann architecture is essential for further gains in computational efficiency.
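To make the link between neural network layers and MVM concrete, here is a minimal pure-Python sketch of one fully connected layer. The function names `mvm`, `dense_layer`, and `bytes_moved` are hypothetical, and the byte count is only a back-of-the-envelope estimate that assumes 32-bit values and no caching, so every weight and every input element travels from memory to the processor once per multiplication.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def mvm(W: Matrix, x: Vector) -> Vector:
    """Matrix-vector multiplication: y[i] = sum over j of W[i][j] * x[j]."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def dense_layer(W: Matrix, x: Vector, b: Vector) -> Vector:
    """One fully connected layer: an MVM, a bias add, then a ReLU activation."""
    return [max(y_i + b_i, 0.0) for y_i, b_i in zip(mvm(W, x), b)]


def bytes_moved(rows: int, cols: int, bytes_per_value: int = 4) -> int:
    """Rough operand traffic for one MVM on a von Neumann machine.

    Assumption (hypothetical model): no caching, so all rows * cols weights
    and all cols input elements are fetched from memory exactly once.
    """
    return (rows * cols + cols) * bytes_per_value


if __name__ == "__main__":
    W = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
    print(dense_layer(W, [1.0, 2.0, 3.0], [0.0, -10.0]))  # [6.0, 0.0]
    # A single 1024 x 1024 layer with 32-bit values:
    print(bytes_moved(1024, 1024))  # 4198400
```

Even this single modest layer needs about 4 MB of operands for roughly one million multiply-accumulate operations, which illustrates why memory bandwidth, not arithmetic, tends to dominate.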

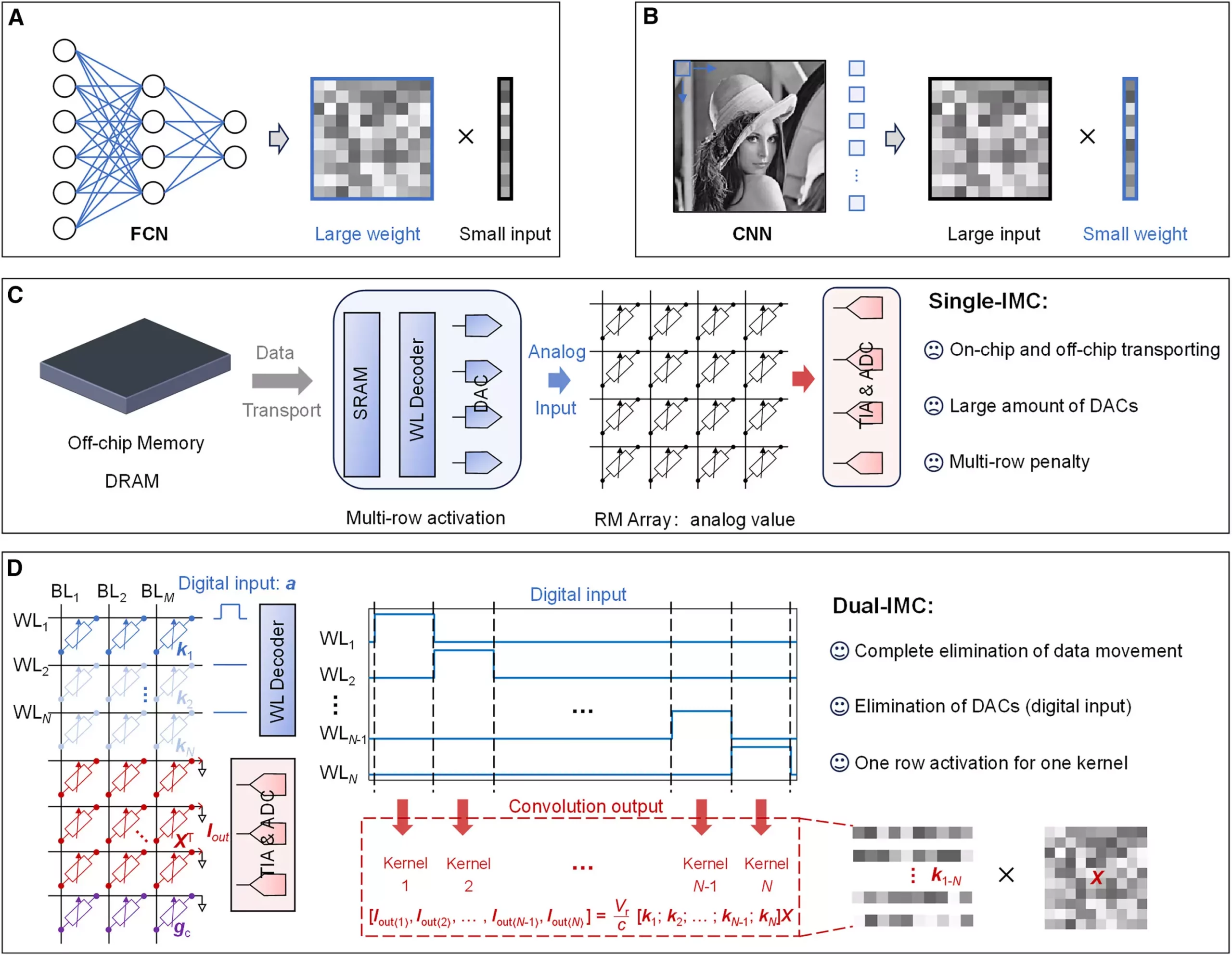

To mitigate this bottleneck, the prevailing approach is a single in-memory computing (single-IMC) scheme, in which the neural network weights are stored in a memory chip and the multiplication takes place inside the memory array, while the input data still arrive from outside it. However, this approach has drawbacks. Fetching inputs from off-chip and on-chip memory introduces delays and consumes both power and chip area. In addition, the digital-to-analog converters (DACs) needed to turn those inputs into signals the memory array can process add complexity and increase operating cost. These limitations call for a different solution, which the dual-IMC scheme aims to provide.
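The single-IMC principle can be illustrated with an idealized resistive crossbar. This is the generic textbook picture of analog in-memory MVM, not the specific circuit used in this research. In the hypothetical Python sketch below, weights are stored as device conductances, a DAC (the function `dac`, with an assumed 4-bit resolution and 0.2 V full scale) converts digital inputs into row voltages, and the column currents carry the result. The model ignores device noise, wire resistance, and negative weights, which real designs handle with techniques such as differential device pairs.

```python
from typing import List, Sequence


def dac(codes: Sequence[int], n_bits: int = 4, v_max: float = 0.2) -> List[float]:
    """Idealized n-bit DAC (hypothetical parameters): map integer codes
    0 .. 2**n_bits - 1 linearly onto voltages in [0, v_max]."""
    levels = 2 ** n_bits - 1
    for c in codes:
        if not 0 <= c <= levels:
            raise ValueError(f"code {c} out of range for a {n_bits}-bit DAC")
    return [c / levels * v_max for c in codes]


def crossbar_mvm(G: List[List[float]], v: Sequence[float]) -> List[float]:
    """Ideal crossbar read.

    G[i][j] is the conductance (siemens) of the cell at row i, column j.
    Driving row i with voltage v[i] makes each cell on that row pass a
    current G[i][j] * v[i] (Ohm's law); each column wire sums the currents
    of its cells (Kirchhoff's current law), so column j outputs
    sum over i of G[i][j] * v[i], an MVM computed where the weights live.
    """
    n_cols = len(G[0])
    return [sum(G[i][j] * v[i] for i in range(len(v))) for j in range(n_cols)]


if __name__ == "__main__":
    G = [[1e-6, 2e-6], [3e-6, 4e-6]]  # weights stored as conductances
    v = dac([15, 0])                  # digital inputs -> row voltages [0.2, 0.0]
    print(crossbar_mvm(G, v))         # roughly [2e-07, 4e-07] amperes
```

The `dac` step is the conversion stage that, according to the researchers, the dual-IMC scheme removes by keeping the inputs inside the memory array as well.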

The dual-IMC scheme proposed by Sun and his team represents a paradigm shift in how AI computations are performed. By storing both the neural network weights and the input data in the same memory array, the method carries out operations fully in memory. This removes the cumbersome need to move data between separate memory types, addressing a core issue of conventional computing architectures.

Testing their dual-IMC scheme on resistive random-access memory (RRAM) devices, the researchers demonstrated significant improvements in both signal recovery and image processing tasks. Their findings suggest a pathway toward highly efficient computing architectures capable of meeting the growing demands of AI processing.

The research highlights several key advantages of the dual-IMC scheme over its predecessor. First, fully in-memory computation saves the time and energy normally spent transferring data from off-chip dynamic random-access memory (DRAM) and on-chip static random-access memory (SRAM). Second, eliminating this data movement improves computing performance and can substantially speed up complex data operations. Third, removing the DACs saves chip area, power, and latency, which in turn lowers production costs.

As demand for faster and more efficient data processing keeps growing in an increasingly digital world, these results hold considerable promise for future computing architectures. The dual-IMC scheme could open a new era for AI hardware, enabling applications that are impractical on today's systems. If the approach is widely adopted, its benefits could extend beyond AI to every field that relies on intensive data processing.

By tackling one of the most pressing limitations of current technology, the work of Professor Sun Zhong and his team points to a brighter future for AI and computer science. Innovations like the dual-IMC scheme may soon push past the barriers imposed by the von Neumann bottleneck, opening a new landscape of computing efficiency and capability. As researchers continue to explore and refine these concepts, the potential for new applications in artificial intelligence is vast.