As technology reshapes everyday tasks, it is natural to wonder whether an app could help shoppers pick the best fruits or vegetables in a grocery store. Humans are good at judging food quality from subtle cues and can adapt to changing conditions, while current machine-learning models often lag behind. New research conducted at the Arkansas Agricultural Experiment Station points to a promising way of using machine intelligence to support food selection.

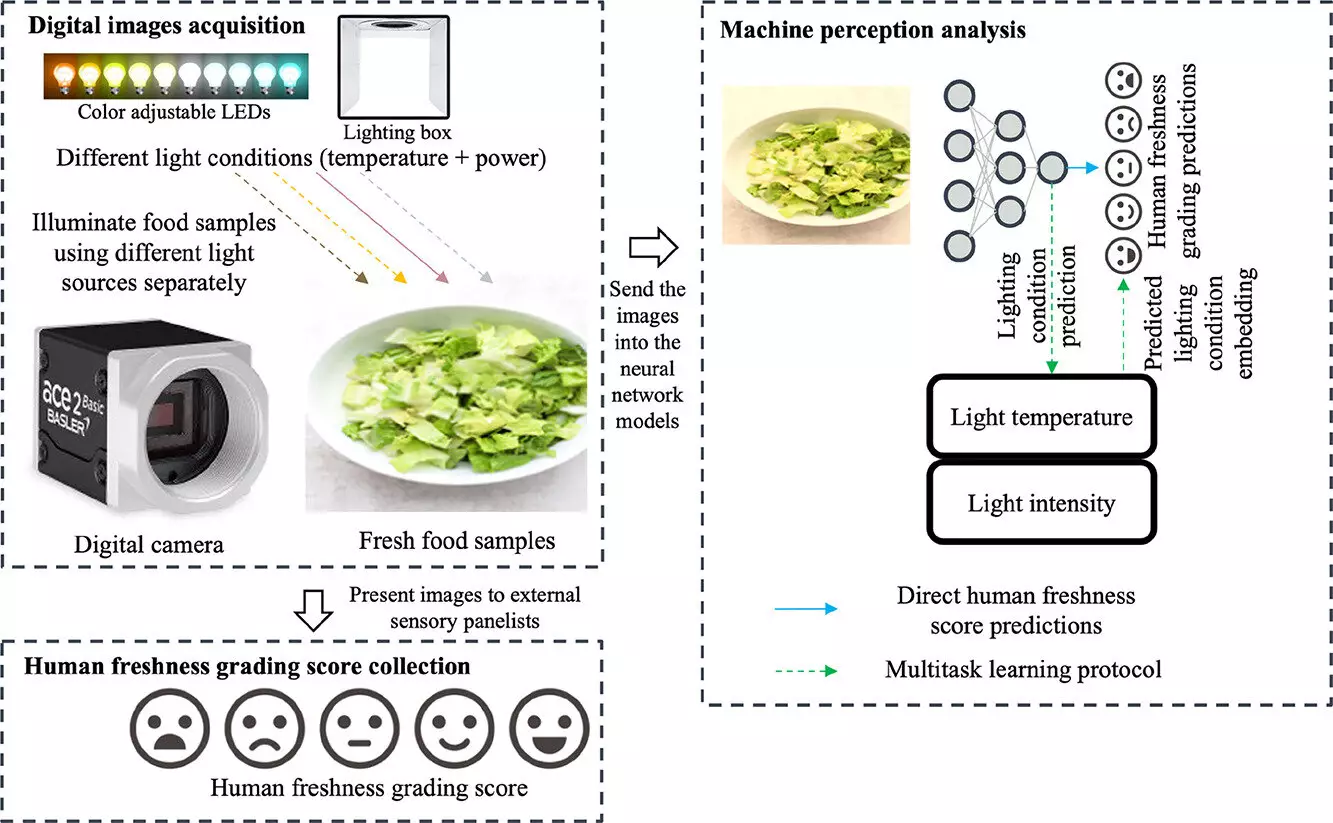

Human perception is complex and shaped by many variables, including lighting and color. This variability can lead to inconsistent judgments, particularly when evaluating food quality. A study led by Dongyi Wang, an expert in smart agriculture and food manufacturing, demonstrates how human input can refine the predictive abilities of machine-learning models. By analyzing how people perceive food quality under various lighting conditions, the researchers aim to develop systems that evaluate food quality with greater precision and consistency than human assessment alone.

Wang emphasizes that understanding how reliable human perception is forms the foundation for improving machine-learning algorithms. By acknowledging the discrepancies in human evaluations, the researchers aim to build models that learn from these variations rather than replicate them. Integrating human sensory data into machine models in this way could substantially change how food quality is assessed.

The study's central result is concrete. By integrating human perception data, derived from sensory evaluations of lettuce images taken under varying lighting conditions, the researchers reduced prediction errors in machine-learning models by approximately 20%. This improvement is substantial given that traditional models often overlook the effect such environmental changes have on human perception.
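To make the "approximately 20%" figure concrete, here is a minimal sketch of how a relative reduction in prediction error can be computed. It assumes mean absolute error as the metric, which the article does not specify, and every score and function name below is hypothetical and chosen purely for illustration; none of the numbers come from the study.

```python
def mean_absolute_error(predicted, actual):
    """Average absolute gap between model predictions and panel scores."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def relative_error_reduction(baseline_error, improved_error):
    """Fraction by which the error drops relative to the baseline."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return (baseline_error - improved_error) / baseline_error


# Hypothetical freshness scores from a sensory panel (not study data).
panel_scores = [8.0, 6.0, 4.0, 2.0]
# Hypothetical predictions from a model trained without perception data.
baseline_predictions = [7.0, 7.0, 3.0, 3.0]
# Hypothetical predictions from a model that also uses perception data.
informed_predictions = [7.2, 6.8, 3.2, 2.8]

baseline_mae = mean_absolute_error(baseline_predictions, panel_scores)
informed_mae = mean_absolute_error(informed_predictions, panel_scores)
reduction = relative_error_reduction(baseline_mae, informed_mae)

print(f"Relative error reduction: {reduction:.0%}")
```

With these made-up numbers the baseline error is 1.0 score points and the informed error is about 0.8, so the relative reduction comes out at roughly 20%. The point is only that the percentage is measured against the baseline model's error, not against the score scale.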

The study centers on a detailed examination of Romaine lettuce. Researchers gathered data from a pool of 109 participants, who evaluated images based on freshness over a period of days. Using a large dataset covering a range of lighting and color scenarios, the study shows that even small shifts in environmental lighting can significantly influence the perceived quality of food. These findings challenge existing methods that rely primarily on simple color information and basic human labeling, and they highlight a critical gap in current machine vision techniques in food engineering.

What sets this research apart is its approach to data. The researchers used well-known machine-learning models to process the same lettuce images evaluated by the human sensory panel, with the aim of training machine vision systems to emulate human perception more closely. As Wang highlights, artificial intelligence trained in this manner could be applied not only in the food industry but also well beyond it, in areas ranging from jewelry assessment to quality control in manufacturing.

The interdisciplinary nature of the research contributes to its validity, drawing insights from experts in engineering, food science, and sensory analytics. The involvement of a dedicated Sensory Science Center fortified the research with robust evaluative techniques, ensuring that the conclusions drawn would have real-world applicability.

While the concept of an app that can guide us through the produce aisle remains somewhat aspirational, the groundwork laid by this study provides an exciting glimpse into the future of food selection. As this technology develops, grocery stores may find themselves equipped with insights on how to display food products more effectively, create better lighting conditions, and optimize overall presentation for consumer engagement.

The implications extend to shoppers as well. Consumers could eventually rely on applications that not only guide purchases but also provide assurance that the food they select meets their quality expectations. At a time when transparency and quality assurance weigh heavily in consumer decisions, combining machine learning with human perception could meaningfully improve that experience.

Ultimately, food quality assessment is evolving as researchers like Wang and his team pave the way for a more reliable, data-driven approach to understanding food quality. Bridging the gap between human perception and machine capabilities may soon go beyond mere convenience and support better-informed consumer choices in the food sector.