Accurate weather forecasting is a linchpin for numerous sectors of the American economy, affecting everything from aviation safety to agricultural planning. The inherent unpredictability of weather systems presents a challenge that demands sophisticated modeling techniques. Traditionally, weather forecasts have relied heavily on rigorous physical equations from thermodynamics and fluid dynamics. These conventional models are computationally intensive and typically need supercomputers to produce forecasts. However, as technology evolves, new approaches are surfacing that could redefine how we understand and predict atmospheric phenomena.

AI and Foundation Models: A New Era in Forecasting

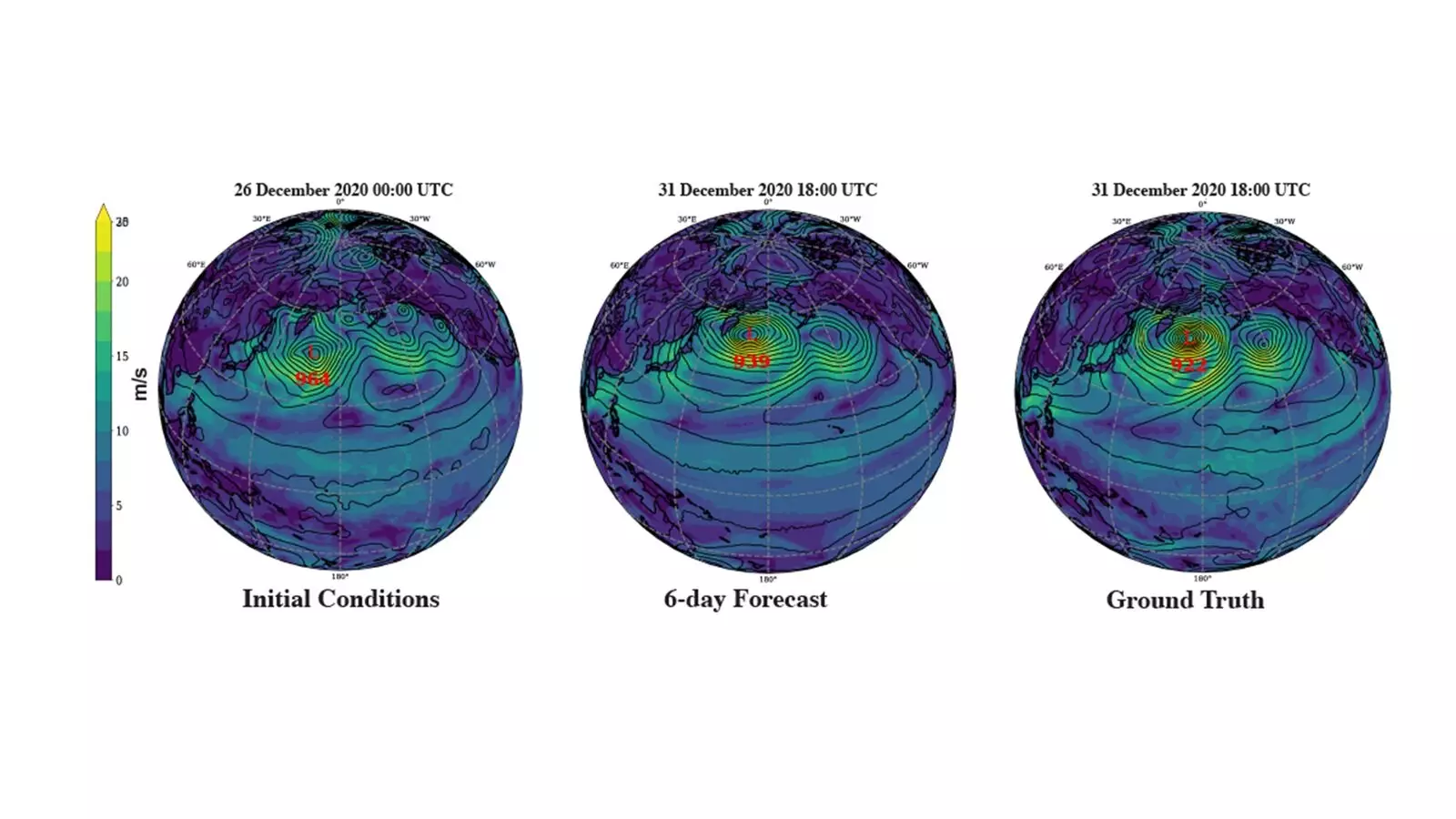

Recent efforts from tech giants like Nvidia and Google have set the stage for a paradigm shift in how meteorological predictions are constructed. Research at the U.S. Department of Energy’s Argonne National Laboratory, led by scientists including Aditya Grover and Tung Nguyen of UCLA, is exploring how well artificial intelligence models, specifically foundation models, perform at weather forecasting. This approach could produce forecasts that are not only more accurate than those of existing numerical weather prediction models but also significantly cheaper to compute.

A distinct feature of these foundation models is their ability to leverage “tokens.” In traditional natural language processing, tokens represent words or phrases that form the basis of meaningful sentences. In the realm of weather forecasting, however, these tokens take on a visual form: small patches of graphical data illustrating various atmospheric conditions, such as humidity, temperature, and wind speed. As Sandeep Madireddy from Argonne aptly describes, this shift towards “spatial-temporal data” allows the model to grasp the interactive dynamics of weather variables in a novel and efficient manner.
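To make the idea of weather "tokens" concrete, the following is a minimal sketch of how gridded fields might be cut into patches. It assumes a patching scheme in the style of vision transformers; the article does not describe the Argonne model's actual implementation, and the function name `patchify`, the grid sizes, and the values are all hypothetical.

```python
from typing import List

Grid = List[List[float]]  # one variable on a latitude x longitude grid


def patchify(fields: List[Grid], patch: int) -> List[List[float]]:
    """Split stacked variable grids into non-overlapping patch tokens.

    Each token is a flat vector holding the patch x patch values of every
    variable at one location, so a single token combines, for example,
    temperature, humidity and wind speed for the same small region.
    Assumes all grids in `fields` share the same shape.
    """
    rows, cols = len(fields[0]), len(fields[0][0])
    if rows % patch or cols % patch:
        raise ValueError("grid dimensions must be divisible by the patch size")
    tokens = []
    for top in range(0, rows, patch):
        for left in range(0, cols, patch):
            token = [
                grid[r][c]
                for grid in fields
                for r in range(top, top + patch)
                for c in range(left, left + patch)
            ]
            tokens.append(token)
    return tokens


# Hypothetical 4 x 8 grids for three variables (values are made up).
temperature = [[float(r * 8 + c) for c in range(8)] for r in range(4)]
humidity = [[0.5] * 8 for _ in range(4)]
wind_speed = [[2.0] * 8 for _ in range(4)]

tokens = patchify([temperature, humidity, wind_speed], patch=2)
print(len(tokens), len(tokens[0]))
```

With a 4 x 8 grid and 2 x 2 patches, this yields 8 tokens of 12 values each (3 variables times 4 grid cells). A real model would then map each token to an embedding and attend across tokens in space and time; that step is omitted here.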

Efficiency Meets Innovation

An intriguing result of this research is that lower-resolution data can still yield forecasting accuracy comparable to that of traditional high-resolution models. Rao Kotamarthi, an atmospheric scientist at Argonne, emphasizes that the historical push toward higher resolution has come at a hefty computational expense. The current methodology suggests that significant insights can arise from reconsidering this established paradigm. By combining coarse-resolution data with intelligent modeling techniques, researchers could make significant advances without overburdening computational resources.

This finding cannot be overstated, as it suggests a promising new direction for meteorological science: one that may enable faster processing and real-time forecasting without the financial and environmental costs associated with sprawling data centers and powerful supercomputers.
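As a rough illustration of why coarser data is cheaper to work with, the sketch below block-averages a grid and compares grid-point counts. Block averaging is only one assumed way to coarsen data and is not stated to be the method used in the Argonne research; the function name `coarsen` and the sample grid are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def coarsen(grid: Grid, factor: int) -> Grid:
    """Block-average a grid: each factor x factor block becomes one cell."""
    rows, cols = len(grid), len(grid[0])
    if rows % factor or cols % factor:
        raise ValueError("grid dimensions must be divisible by the factor")
    area = factor * factor
    return [
        [
            sum(
                grid[r][c]
                for r in range(top, top + factor)
                for c in range(left, left + factor)
            ) / area
            for left in range(0, cols, factor)
        ]
        for top in range(0, rows, factor)
    ]


# Hypothetical 4 x 8 fine grid (values are made up).
fine = [[float(r + c) for c in range(8)] for r in range(4)]
coarse = coarsen(fine, factor=2)

fine_points = len(fine) * len(fine[0])
coarse_points = len(coarse) * len(coarse[0])
print(fine_points, coarse_points)
```

Halving the resolution in both horizontal directions cuts the number of grid points by a factor of four (here from 32 to 8), so a model consuming the coarse grid has correspondingly less input to process per time step.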

Climate Modeling: A Greater Challenge

While the breakthroughs in AI-based weather forecasting are encouraging, transferring these models to climate analysis poses an array of complexities. Climate modeling, which examines trends over extended periods, is complicated by the fact that the climate is no longer static; it is shifting due to anthropogenic factors like increased carbon emissions. Troy Arcomano, an environmental scientist at Argonne, highlights the challenge this creates: the constant evolution of climate data renders traditional statistical models outdated almost as soon as they are developed.

There’s a clear necessity for dedicated efforts toward utilizing AI for climate modeling, though Arcomano acknowledges that the private sector is more likely to focus on improving weather forecasting instead. This discrepancy points to a gap in funding and incentive structures for climate research compared to immediate weather-related outcomes, potentially hindering comprehensive progress in understanding climate systems.

The Role of Exascale Computing

The introduction of exascale supercomputers, such as Argonne’s newly deployed Aurora, stands to revolutionize the training of highly complex AI models capable of high-resolution predictions. Kotamarthi explains that such advanced machines are essential for fine-grained characterizations of atmospheric behavior, emphasizing the importance of investing in cutting-edge computing infrastructure to pave the way for deeper insights into weather and climate patterns.

This development is promising not just for immediate weather predictions but for long-term climate research as well. Greater computational capability can deepen our understanding of complex systems in ways that were previously out of reach.

The recognition of Argonne’s research, illustrated by its accolade at the “Tackling Climate Change with Machine Learning” workshop, underscores the relevance of integrating AI into meteorological studies. As we move forward, embracing these innovations promises not only to bolster predictive capabilities but also to shape the future of our engagement with the atmospheric sciences in a rapidly changing world.